This article provides insights into consumers' current usage of voice technology and practical steps for marketers who want to take advantage of this growing trend.

- A survey of current voice technology usage in nine countries found that on average 47% of smartphone users employ voice technology of some kind at least once a month and 31% use it at least weekly, equating to almost 600 million people.

- The current voice landscape is dominated by the tech giants, with Amazon's Alexa and Echo, Google Home, Apple's HomePod and Baidu's Little Fish in China all competing to become market leaders.

- Brands can use voice technology either by building experiences that are accessed through voice assistants, e.g. a voice assistant app, like Alexa skills, or by developing voice capabilities within their owned assets, such as their products (e.g. voice activated white goods), their packaging or their ads (e.g. voice interactive audio ads).

- The primary motivation for using voice is efficiency, so the focus for any brand should be on designing an experience which is faster, simpler or easier than alternative modes of interaction.

- Voice interaction provides a great opportunity to convey brand value and project personality, but beware of creating something that seems so lifelike that users may find it creepy.

Voice technology is sweeping the world, as assistants like Amazon's Alexa, Google Assistant and smart speakers like China's "Little Fish" capture consumers' imagination.

Developments in speech recognition and natural language processing (NLP) mean we can now talk to computers in a way considered science fiction just a few years ago. At just five percent, speech recognition error rates are now at human parity and improving all the time.


Voice is fundamentally a highly intuitive way to interact and, as such, there is the potential for it to become consumers' primary means of interaction with technology over the coming years.

To explore this further, Mindshare Futures and the J. Walter Thompson Innovation Group recently commissioned a global study of voice technology called Speak Easy, spanning nine countries (Australia, China, Germany, Japan, Singapore, Spain, Thailand, U.K., and U.S.). This involved qualitative and quantitative research, expert interviews and a neuroscientific experiment with Neuro Insight measuring brain activity during voice interactions.

This paper explores some of the findings from Speak Easy and presents practical steps for marketers to follow as voice technology uptake grows.

Definitions

- Voice technology: A means of interacting with a device using voice as the input mechanism. Currently, voice technology is most commonly used with the smartphone or smart speaker but is rapidly being integrated into other objects.

- Voice assistants: These are tools powered by artificial intelligence (AI), often developed by the major tech players. Brand-agnostic, they currently answer simple queries or carry out basic commands, but aim to act as a user's trusted advisor at each step of their daily routine. Originally mobile-based, they are now integrated into smart speakers and other connected devices and work across multiple platforms.

- Voice search: This describes the ability for users to input search queries into a search engine via voice. Results are typically displayed graphically.

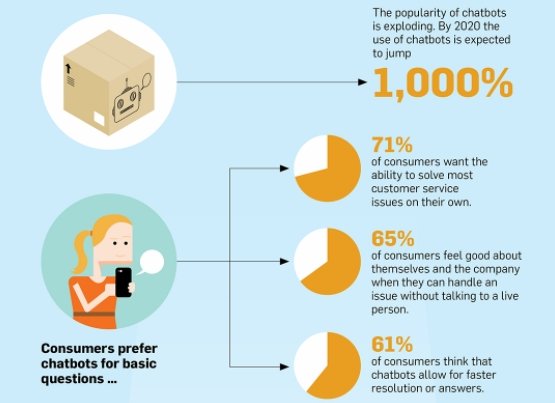

- Chatbots: Software designed to carry out conversations with human users, usually deployed by a brand. While similar to a voice assistant, a chatbot has a much narrower scope. Chatbots are typically text-based, though they are increasingly built with voice input, especially in Asia.

Where to start

The Voice Landscape

The current voice landscape is dominated by the tech giants that offer propositions on a global scale:

- Amazon: Powered by voice assistant Alexa, the Amazon Echo first went on sale in the US in 2014, followed by the UK and Germany in 2016. Alexa hosts third-party skills, which function much like apps but over a voice-user interface (VUI). In an attempt to stimulate the market, Amazon has also made Alexa available to hardware developers as Alexa Voice Service, which they can build into their own products.

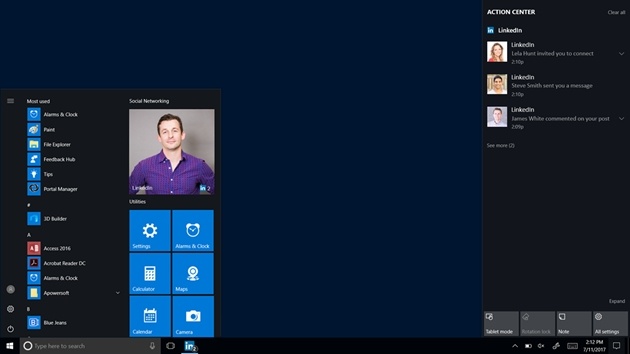

- Google: The company launched Home, its domestic hardware equivalent to Echo, in the US in 2016 and later expanded into the UK in April 2017. Home allows third parties to create Actions, which are the equivalent of Amazon's skills. It is powered by Assistant, the voice assistant that is currently available on over 100m devices, including the iPhone through the Allo messaging platform.

- Apple: This voice pioneer launched its voice assistant Siri in 2011. Late to the smart speaker category, Apple announced the launch of its HomePod speaker at the Apple WWDC in June 2017. It has also integrated Siri into wearables (the Apple Watch and AirPods).

- Asian market: Chinese search giant Baidu unveiled its smart speaker Xiaoyu Zaijia - or Little Fish - at CES in January 2017. JD.com has also launched LingLong DingDong in China, a home speaker taking some design cues from the Echo. Most recently, Alibaba announced its own smart speaker called Tmall Genie. Samsung has its own voice assistant Bixby in the Galaxy phones and is expected to build voice interfaces into all its consumer products over the coming years, following the company's 2016 acquisition of Viv, the AI built by Siri's original developers.

- Beyond assistants: We are also witnessing a rise in:

  - Voice-activated social robots: SoftBank's Pepper, a child-sized robot, has been used in the Hilton McLean hotel in the U.S. as well as two Belgian hospitals to greet visitors.

  - In-product voice: Manufacturers as diverse as Mattel and Ford are building in voice capability to enhance their products. Voice interfaces are also being built into the retail environment, signage and packaging.

Current usage

Across the nine countries surveyed by the Speak Easy study, on average 47% of smartphone users employ voice technology of some kind at least once a month and 31% use it at least weekly. That equates to almost 600 million people - more than the population of the U.S. and Brazil combined - already engaging with this new user interface.

Voice usage can be split into two main types: tasks carried out entirely by voice, and tasks that are initiated by voice and completed on screen.

Currently, tasks best suited to voice interaction are simple enough that both the question and response may be delivered through this interface. Examples include setting an alarm, playing music or asking a question, for instance, "Alexa, what's the weather like today?".

As voice assistants become more intelligent and support more complex dialogue, opportunities for "100%" voice interactions should grow. An early example is the Johnnie Walker skill for Alexa, which initiates a back-and-forth conversation, posing questions to deliver the perfect whisky recommendation for users.

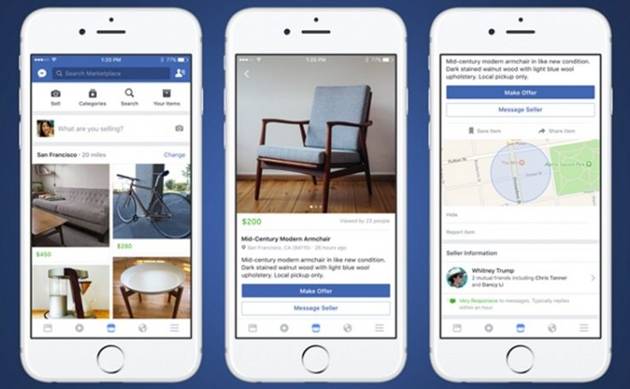

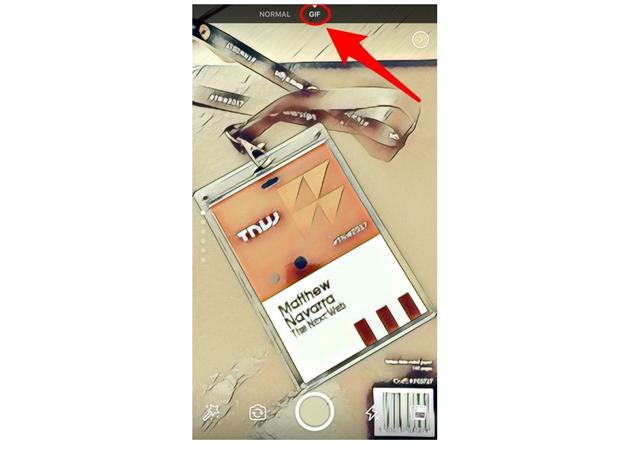

Despite these developments, the interplay between voice and screen is likely to remain important. This is particularly true where interactions involve reviewing a range of options or seeing what a product looks like. To address these use cases, the Chinese search engine Baidu released its first smart speaker with a screen (Xiaoyu Zaijia - or "Little Fish"), and Amazon has recently launched a new screen-based smart speaker called Echo Show.

Currently, consumers prefer to use voice in private spaces, particularly the home, where smart speakers such as Echo or DingDong thrive.

The car also provides a suitable space for voice technology, allowing drivers to multi-task, hands-free. This is particularly true in car-centric markets such as the US, where 65% of regular voice users carry out voice interactions while driving (versus 40% globally).

There is a reluctance from many to use voice in public spaces, particularly in countries where speaking out loud in public may be seen as culturally inappropriate, such as Japan. 72% of regular voice users there say, "I would feel too embarrassed to use voice technology in public" (versus 57% globally).

"As we use it more in the home and the car, people will start to get more used to it, and the feeling daft factor will fade away a bit."

Duncan Anderson, former chief technology officer, IBM Watson Europe

As people become desensitized, public usage may increase in some markets. Voice-responsive headphones such as Apple's AirPods or Doppler Labs' Here One could prove popular by creating semi-private interactions.

Motivations for usage

The Speak Easy research identified efficiency as a primary motivation for using voice. The top three reasons for use amongst regular voice users globally were "it's convenient" (52%), "I don't have to type" (48%), and "it's simple to use" (46%).

Additional research tapped neuroscience to investigate the brain's response to voice interactions, compared to touch or typing. It found that voice interactions showed consistently lower levels of brain activity than their touch equivalent, indicating that voice response is less taxing than its screen-based equivalent. This helps explain why efficiency is such an important motivation for using voice technology.

Beyond efficiency, potential voice users are looking for guarantees around their personal privacy, a better understanding of the impact the service could make on their lives, and a promise that the technology will seamlessly integrate into their lives.

Essentials

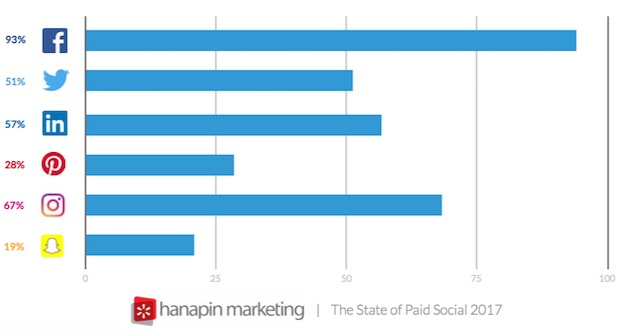

Broadly there are two ways marketers can currently take advantage of the rise of voice technology.

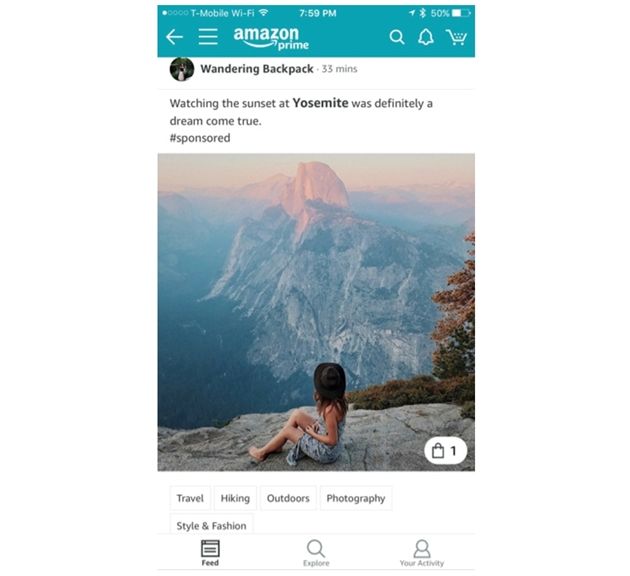

Firstly, they can look to build voice experiences that are accessed through voice assistants. This could be a voice assistant app, like Alexa skills, Actions for Assistant or Dingdong services, which are designed for use with their respective platforms and carry out specific roles. More simply, this could include ensuring that brand content can be surfaced by an assistant query, which involves a combination of SEO principles and iterative testing.

Secondly, brands can look to develop voice capabilities within their owned assets. This could be within the products themselves (e.g. voice activated white goods), within packaging (at CES 2017 Cambridge Consultants launched talking packaging for pharmaceuticals), within their ads (e.g. voice interactive audio ads) or their owned real estate (e.g. retail outlets).

Irrespective of the type of voice interaction a brand builds, there is a series of core principles that need to be applied when designing any voice experience.

1. Find the 'micro moments' where voice interaction adds genuine utility

A voice interaction should offer greater value than alternative modes of interaction to avoid falling into the trap of technology for technology's sake. As the primary motivation for using voice is efficiency, the focus should be on designing an experience which is faster, simpler or easier.

As with any brand engagement, there is a real need to interrogate the consumer journey and understand the target's relationship with the brand. Key to voice interactions, brands should identify brief but powerful opportunities in a consumer's life to reduce pain points or friction, otherwise known as 'micro moments'.

These micro moments could be identified at different stages along the consumer journey and serve different marketing purposes:

- Advice: Providing in-the-moment advice while the consumer is using the product to enhance brand perceptions. An early example here is the Tide stain skill which provides voice instructions on how to deal with particular stains.

- Reduce friction: Minimising the friction around purchase to help drive loyalty and higher revenue per customer. Baidu's Duer taps into this by allowing people to place restaurant reservations, buy movie tickets and book flights through simple voice commands.

- Customer experience: Helping people get more out of using the product or service to improve the overall customer experience and retention. The latest Sky Q remote comes with a voice button linked to a microphone which users can speak into to search for content.

2. Find your voice

Voice interaction offers great potential for brands because it has been proven to elicit a stronger emotional reaction from people than its text equivalent. Neuroscientific research showed that, when carrying out a task, respondents displayed double the emotional response when speaking a brand name versus typing it.

Voice also provides a great opportunity to convey brand value by building a sound experience that projects personality and effectively utilises the right tone of voice. People are hardwired to look for human-like patterns in inanimate objects (a concept called pareidolia). Consumers will project a personality onto a brand, whether the brand wants it or not.

According to Martin Reddy, co-founder and chief technology officer of PullString, the bot developer: "It is interesting, when something acts naturally and human back to you, how much we imbue it with sentience, with human personality."

However, in developing a brand persona, one of the potential pitfalls is to create something which seems so lifelike that it feels creepy, a concept known as the 'uncanny valley'. To avoid this, the trick is to convey brand personality without pretending to be human.

In addition to persona, voice experience designers should reflect on the context and emotional state of the user, and respond accordingly. Lateral thinking that explores potential interactions outside the core use cases is also critical. Voice strategy should consider where the conversation might lead and how best the brand should react. For example, how should a brand respond if people ask it about competitors or green credentials?

3. Design 'conversation first'

Designing for a VUI is different to other forms of digital interaction. The rules are still being written, so experiment and learn. Here are some starting points:

- Guide people's queries with suggestions, but don't give them long lists or too many open-ended questions.

- Human conversations are based around regular 'turn taking'. Ask a manageable number of questions and give the user the chance to respond and refine after each one.

- Think multi-modal. Work out what works best via voice only (more likely to be quick, simple instructions or tasks) and what would benefit from a screen (presenting choices such as 'suggestion chips' in Assistant, or demonstrating with video clips).

- If users are logged in to your platform during the voice interaction, work out what information can be surfaced to personalise the experience.

- There will inevitably be instances where your voice experience doesn't understand the user. Think about how you deal with these dead ends. Suggest alternative options rather than keep repeating 'I don't understand'. For some brands, particularly where the experience is focused on customer service, it may make sense to divert the user to a human call centre agent.
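The last point, on dead ends, can be sketched in code. The following is a minimal illustration in Python and is not tied to any particular assistant platform: the `Session` class, the `SUGGESTIONS` list and the `MAX_MISSES` threshold are all hypothetical names and values chosen for the example. It shows one way to vary the reprompt with a concrete suggestion on each failed turn and, for a customer service experience, to hand over to a human agent after repeated misses.

```python
from dataclasses import dataclass

# Hypothetical fallback policy: the suggestions and threshold are illustrative only.
SUGGESTIONS = ["check an order", "find a store", "hear today's offers"]
MAX_MISSES = 2  # assumed number of failed turns tolerated before a human handoff


@dataclass
class Session:
    misses: int = 0  # consecutive turns the experience failed to understand


def fallback_response(session: Session, customer_service: bool = False) -> str:
    """Return the reply for a turn the voice experience did not understand."""
    session.misses += 1
    if customer_service and session.misses > MAX_MISSES:
        # Divert to a person rather than trapping the user in a loop.
        return "Let me connect you to one of our agents."
    # Rotate through suggestions so the same prompt is not repeated verbatim.
    hint = SUGGESTIONS[(session.misses - 1) % len(SUGGESTIONS)]
    return f"Sorry, I didn't catch that. You could ask me to {hint}."


def understood(session: Session) -> None:
    """Reset the miss counter once a turn is understood."""
    session.misses = 0
```

In practice the suggestions would be drawn from the tasks the experience actually supports, and the handoff threshold would be tuned from real usage data after launch.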

4. Focus on discoverability

Voice assistants are going to become increasingly influential gatekeepers to the consumer, so ensuring they surface your brand will become more and more important.

For voice search, look at long-tail keywords for your paid search spend, focusing on more conversational phrases. Think about how your SEO could be enhanced for voice search, for example by using FAQs within your site that reflect voice queries.

Both Alexa skills and Dingdong services need to be enabled by the user and, with over 10,000 skills now available, discoverability is a challenge. By contrast, Google Assistant Actions can be used without prior activation. Whether an Action is surfaced without being mentioned by name ('implicit invocation' as Google terms it) is dependent on Assistant deeming it the best result.

Brands need to adopt a two-pronged approach. Firstly, they should use paid and owned media to encourage users to seek them out directly. In addition, they should engage in next generation SEO or 'algorithm optimisation' to make sure the voice assistants surface their voice skills or Actions.

5. Test and iterate

Launching a voice experience is just the start. People will behave in unexpected ways beyond all the potential testing scenarios and ask unexpected questions. This is particularly the case if a voice experience is being developed for multiple markets (e.g. US & UK) as cultural and language differences will throw up local nuances. So, it is critical that in planning a voice experience, brands retain resource to quickly adapt the experience once there is more data on how people really behave with it.

Checklist

Some key questions to ask when developing a voice experience:

- Being brutally honest, does your voice experience offer genuine utility over other interaction methods?

- Does the persona of the voice experience properly portray what the brand stands for?

- Do you have the right balance between voice and screen interactions?

- Do you have the right plan in place to ensure people know about and can find what you've designed?

- Are you prepared to iterate rapidly after the launch of your design?

Case Studies

IBM Watson & Pinacoteca de São Paulo

70% of Brazilians have never visited a museum or cultural center. The Pinacoteca de São Paulo art gallery set out to change this using voice technology. Visitors can ask IBM's cognitive assistant about art pieces shown at the museum via a smartphone equipped with the mobile 'Voice of Art' app. By explaining the stories behind the pieces and their historical context, the voice assistant inspires greater interest in art.

HelloGbye app

Launched in 2017 in the U.S., the HelloGbye app aims to help travellers plan, book or change travel plans. Operating across both desktop and mobile, it lets users enter requests by voice and refine arrangements with a chatbot. It aims to tap into voice's promise of a simpler, more intuitive interface to help with the travel booking process, which is notoriously cumbersome.

Starbucks, Alexa & Ford

The car is a powerful environment for voice technology because of its hands-free demands. Starbucks has identified a micro moment in the consumer journey where customers could place a drive-by order whilst en route. Ford vehicles in the US equipped with its SYNC3 voice-activated technology will be able to order from Starbucks by saying, "Alexa, ask Starbucks to start my order," utilising Alexa, which is built into the car.

Further Reading

Books: